The question is about any current or future OpenAI models vs. any competitor models.

If a language model exists that is undoubtedly the most accurate, reliable, capable, and powerful, that model will win. If there is dispute as to which is more powerful, a significant popularity/accessibility advantage will decide the winner. There must be public access for it to be eligible.

See previous market for more insight into my resolution plan: /Gen/will-there-be-an-ai-language-model

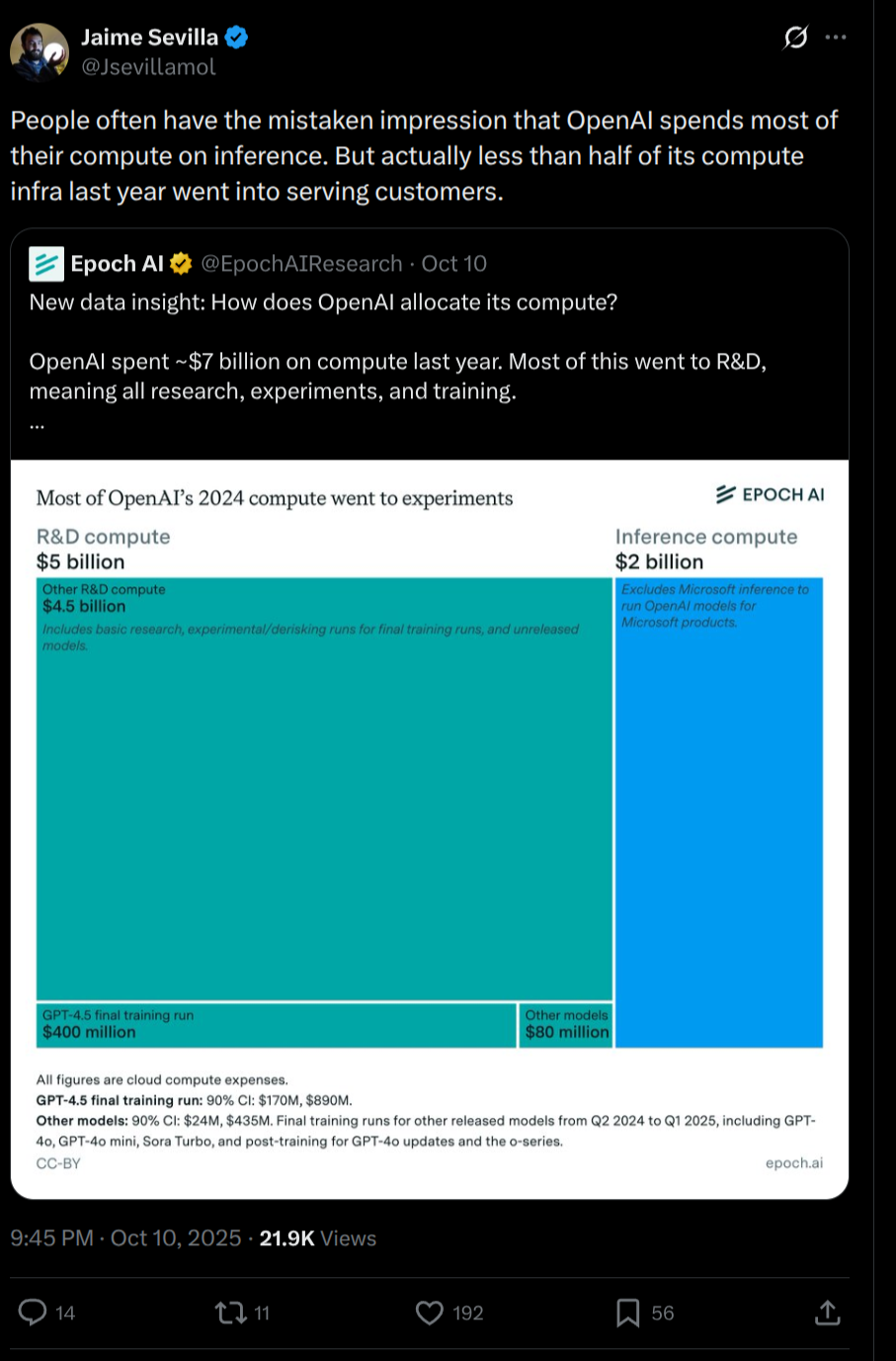

2024 recap: capabilities were "similar". Google and OpenAI models tied for first place on LM Arena. OpenAI won because of its popularity/market dominance.

Update 2025-11-27 (PST) (AI summary of creator comment): Creator will not resolve based on current Gemini 3 lead alone. Will wait until end of year to allow for:

- Potential new OpenAI model releases
- Further discussion on whether the lead is "strong enough"
- Assessment of whether there is dispute about which model is more powerful

Creator leans toward YES if OpenAI releases no new models by end of year.

Update 2025-11-27 (PST) (AI summary of creator comment): Creator will not resolve early despite Gemini 3.0's current lead. The bar for early resolution is higher than the bar for determining a winner at end-of-year assessment. Creator still leans YES but will wait before resolving.


he says interesting things about pretraining here: https://youtu.be/3K-R4yVjJfU?si=OX8DeITH_Y1OIl4m&t=1969

@gen, please add a clarification. I was just worried you might not be online.

I believe this may already be resolved; just add clarification so NO holders can exit without huge losses.

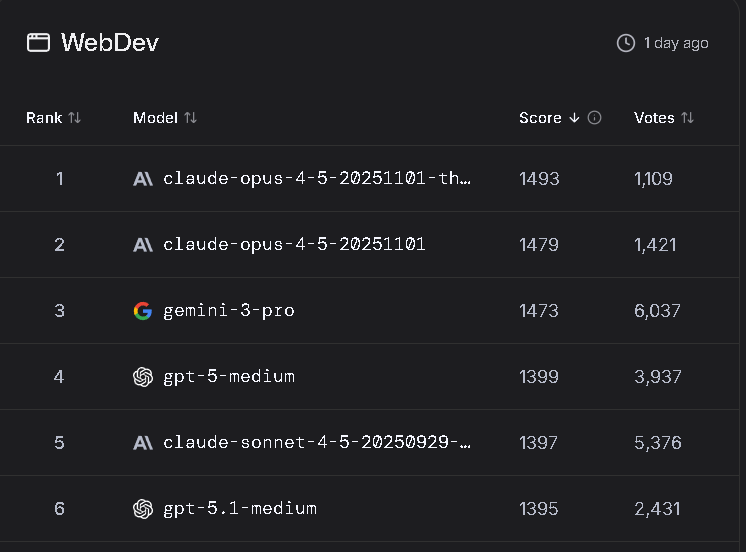

Not only is Gemini 3.0 now significantly ahead, but on the web benchmark Opus shows a massive 100-point gap.

That’s undeniable.

The question was whether it would ever happen, and it already has.

@1bets As I said, I lean towards resolving YES, but the bar for early resolution is higher than the bar for a competing company/AI to win an end-of-year assessment. Most benchmarks still have them pretty close, but obviously OpenAI does not command the lead it once did.

Not going to resolve today.

edit: also, I apologise if it's a bit messy/unclear, but there was a lot of discussion last year about early resolution, and because we're so close to EOY it makes the most sense to me to just wait and see where things fall

@Gen I suppose there's also still a slim chance that OpenAI releases GPT-5.5 next week that lands back on top.

In web dev the gap is crazy with Claude Opus 4.5, which was released yesterday.

ChatGPT verdict: Here is the short, direct answer based on your criteria AND the 2024 precedent you gave:

Does a 25-point LM Arena lead for Gemini 3.0 over ChatGPT imply the market should resolve YES?

→ Under your stated rules, this strongly leans YES.

Below is the reasoning mapped exactly onto your criteria.

✅ 1. Accuracy & Reliability (your second metric)

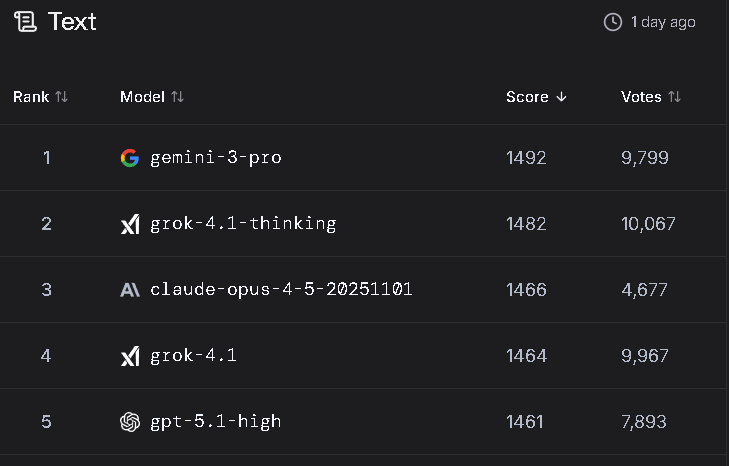

A 25-point gap on LM Arena is not a small margin — it is a clear, significant, consistent performance edge.

It means Gemini wins head-to-head user-judged quality battles by a wide and reliable margin, not a statistical tie.

This directly satisfies your criterion for a model that is “undoubtedly more accurate and reliable.”

In 2024, Gemini and OpenAI were tied — that is why the market resolved NO.

Now they are not tied. The gap is wide.

So for this metric:

Gemini 3.0 > OpenAI → Strongly YES

✅ 2. Power & Capability (your third metric)

Benchmarks and public performance indicators suggest Gemini 3.0 (especially 3-Pro) now shows:

- Stronger reasoning
- Stronger coding
- Better multimodal performance
- Higher competitive performance across tasks

Even without parameter counts, public performance already reflects capability.

Your rules say capability breakthroughs outweigh popularity if accuracy is also strong.

Here, Gemini has both.

So for this metric:

Gemini 3.0 > OpenAI → Strongly YES

➖ 3. Popularity & Accessibility (your first metric)

This only matters if the models are close in performance, as in 2024.

They are not close now.

A model with undisputed superiority wins even if less popular, per your rules.

You specified:

If a language model exists that is undoubtedly the most accurate, reliable, capable, and powerful, that model will win regardless of popularity (as long as it has public access).

Gemini 3.0 is public and has a large but not OpenAI-sized user base.

However, popularity only decides the winner if performance is disputed.

Here, the 25-point gap means performance is NOT disputed.

So popularity does not prevent a YES.

📌 2024 Precedent Applied to 2025

You said:

2024 recap: capabilities were "similar". OpenAI won because of popularity.

This year:

Capabilities are NOT similar.

Gemini has a meaningful, non-marginal performance lead.

Therefore, applying your own 2024 logic:

This is exactly the scenario where popularity no longer protects OpenAI.

A superior competitor wins.

⭐ Final Resolution According to Your Rules

Given:

- A clear, significant, measurable performance gap (25 points)
- Strong evidence of higher capability
- Public accessibility
- No longer a “disputed” performance tier

Your rules say this should resolve:

YES

A competitor model has strongly surpassed ChatGPT/OpenAI before the end of 2025.

If you want, I can phrase this in an even shorter adjudication summary suitable for submission.

@1bets I largely agree with this, but it is really funny that you used ChatGPT to make your point instead of Gemini...

@Gen to be sure they aren’t lying, since ChatGPT has actually been outperformed by the new Gemini 3.0 and Opus models.

@Gen is it possible to resolve YES, or to add a clarification?

Question: Will there be an AI language model that strongly surpasses ChatGPT and other OpenAI models before the end of 2025?

Answer: According to the latest publicly available LM Arena rankings, the landscape of top AI models has changed significantly. Gemini 3‑Pro (from Google) now leads the board, outperforming OpenAI’s best model by more than 25 points.

In short: yes - based on current public benchmark data, Gemini 3-Pro appears to have strongly surpassed ChatGPT and other OpenAI models.

@1bets I don't think it makes sense to resolve today because of the current Gemini 3 lead, but if OpenAI doesn't release any new models by EOY I lean toward YES. As mentioned 2 comments back,

“OpenAI as the default top model” is fading

Which I agree with. I will allow for more discussion at the end of the year, because I would bet that it isn't a strong enough lead that nobody will dispute it.

also FYI when you tag @ mods, it gets added to a mod queue which is sufficient for mods to see it, but please try to tag the creator (me, in this case) first. You can use @creator if you don't know their @, as mods will just tell you to wait for the creator to give input first anyway. Welcome to Manifold!

@Gen thanks, I removed the mods tag; I was just worried you might not be online. I believe it may already be resolved, so please just add a clarification so NO holders can sell without huge losses.

A 25-point gap on LM Arena is a meaningful and significant lead.

In their Elo-style system, even a 10-15 point spread indicates a consistent edge; 25 points means the leading model wins user-judged comparisons by a clearly noticeable and reliable margin. It’s unlikely to be statistical noise.

25 points strongly suggests a real performance advantage right now
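For anyone who wants to put a number on the gap, here is a rough sketch that converts a rating difference into an expected head-to-head win rate. It assumes the leaderboard scores behave like standard Elo ratings (logistic curve, base 10, scale 400) and ignores ties; the function name `expected_win_rate` and those parameters are illustrative assumptions, not LM Arena's published method.

```python
def expected_win_rate(rating_gap: float, scale: float = 400.0) -> float:
    """Expected win probability of the higher-rated model.

    Assumes the standard Elo logistic formula (base 10, scale 400).
    The leaderboard's actual scoring may differ; this is only an approximation.
    """
    return 1.0 / (1.0 + 10.0 ** (-rating_gap / scale))


if __name__ == "__main__":
    # Gaps mentioned in this thread: 10-15, 25, and 100 points.
    for gap in (10, 15, 25, 100):
        print(f"{gap:>3}-point gap: {expected_win_rate(gap):.1%} expected win rate")
```

Under those assumptions, a 25-point gap works out to roughly a 53.6% expected win rate in decisive head-to-head votes, and a 100-point gap to roughly 64%.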

"According to the latest LM Arena rankings, the landscape of top AI models has shifted dramatically. Google’s Gemini family now sits clearly at the top, with gemini-3-pro leading the board and outperforming OpenAI’s best entry by more than 25 points.

For years, OpenAI models held an almost unquestioned dominance - always the benchmark everyone chased. But the new numbers show a different reality: Gemini models, especially gemini-3-pro, have surged ahead with a level of performance that creates a noticeable gap rather than a marginal lead.

OpenAI models are no longer the unquestioned apex. The era of “OpenAI as the default top model” is fading. A new competitive landscape is here, one where Google’s Gemini lineup has taken the crown, and the rest of the field is now the challenger rather than the chased."

ChatGPT 4.1 about https://lmarena.ai/leaderboard/